Small changes to how AI large language models (LLMs) are built and used can dramatically reduce energy consumption without compromising performance, according to new research published by the UN’s science and cultural organisation UNESCO and UK university UCL.

The report advocates for a pivot away from resource-heavy AI models in favour of more compact models.

Used together, the measures it proposes (smaller task-specific models, shorter prompts and responses, and model compression) can reduce energy consumption by up to 90%, the research concludes.

UNESCO has a mandate to support its 194 Member States in their digital transformations, providing them with insights to develop energy-efficient, ethical and sustainable AI policies.

In 2021, the organisation’s Member States adopted the UNESCO Recommendation on the Ethics of AI, a governance framework which includes a policy-oriented chapter on AI’s impact on the environment and ecosystems.

Its new report, “Smarter, Smaller, Stronger: Resource-Efficient AI and the Future of Digital Transformation”, calls on governments and industry to invest in sustainable AI research and development, as well as in AI literacy, so that users can better understand the environmental impact of their AI use.

Generative AI tools are now used by over 1bn people daily. Each interaction consumes energy – about 0.34 watt-hours per prompt. This adds up to 310 gigawatt-hours per year, equivalent to the annual electricity use of over 3m people in a low-income African country.
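As a back-of-envelope check on those figures, the sketch below converts a daily prompt count into annual gigawatt-hours, and works backwards from the annual total to the prompt volume it implies. Only the 0.34 Wh and 310 GWh values come from the report; the function names, the 365-day year and the assumption of a constant energy cost per prompt are illustrative and are not the report's stated method.

```python
WH_PER_PROMPT = 0.34   # watt-hours per prompt, as quoted from the report
ANNUAL_GWH = 310.0     # gigawatt-hours per year, as quoted from the report
WH_PER_GWH = 1e9       # 1 GWh = 1,000,000,000 Wh


def annual_gwh(prompts_per_day, wh_per_prompt=WH_PER_PROMPT, days=365):
    """Annual energy in GWh for a given daily prompt volume (assumed constant)."""
    return prompts_per_day * wh_per_prompt * days / WH_PER_GWH


def implied_prompts_per_day(total_gwh, wh_per_prompt=WH_PER_PROMPT, days=365):
    """Daily prompt volume that would produce the given annual total."""
    return total_gwh * WH_PER_GWH / wh_per_prompt / days


if __name__ == "__main__":
    # One prompt per day from each of 1bn users (an assumption, not a report figure).
    print(f"{annual_gwh(1e9):.1f} GWh per year")
    # Prompt volume implied by the report's annual total.
    print(f"{implied_prompts_per_day(ANNUAL_GWH) / 1e9:.2f}bn prompts per day")
```

Under these assumptions, the annual total corresponds to roughly 2.5bn prompts per day, or a little over two prompts per daily user.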

For the report, a team of computer scientists at UCL carried out a series of original experiments on a range of different open-source LLMs. They identified three innovations which enable substantial energy savings, without compromising the accuracy of the results.

Firstly, they concluded that small models tailored to specific tasks, such as translation or summarisation, can cut energy use by up to 90% without losing performance. Currently, users rely on large, general-purpose models for all their needs.

The report also says that developers have a role to play in the design process: the so-called ‘mixture of experts’ model is an on-demand system incorporating many smaller, specialised models. Each model – for example, a summarising model or a translation model – is only activated when needed to accomplish a specific task.
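The on-demand idea can be illustrated with a minimal sketch in which a router calls only the specialised component a task needs. Everything here is hypothetical: the `Router` class and the two placeholder "experts" stand in for real models, and in production mixture-of-experts systems the routing is typically learned and applied inside the network rather than chosen by task name.

```python
from typing import Callable, Dict


def summarise(text: str) -> str:
    # Placeholder for a small summarisation model: keep the first sentence.
    return text.split(". ")[0].rstrip(".") + "."


def shout(text: str) -> str:
    # Placeholder for a second specialised model (e.g. translation).
    return text.upper()


class Router:
    """Dispatches each request to exactly one expert and counts activations."""

    def __init__(self, experts: Dict[str, Callable[[str], str]]):
        self.experts = experts
        self.calls = {name: 0 for name in experts}

    def run(self, task: str, text: str) -> str:
        if task not in self.experts:
            raise KeyError(f"no expert registered for task {task!r}")
        self.calls[task] += 1          # only this expert does any work
        return self.experts[task](text)


if __name__ == "__main__":
    router = Router({"summarise": summarise, "shout": shout})
    print(router.run("summarise", "Small models save energy. They also cost less."))
    print(router.calls)  # the unused expert's counter stays at zero
```

The point of the design is that experts which are not needed for a request are never invoked, so they contribute nothing to that request's compute cost.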

Secondly, the report found that shorter, more concise prompts and responses can also reduce energy use by over 50%.

Thirdly, model compression can save up to 44% in energy. Reducing the size of models through techniques such as quantisation helps them use less energy while maintaining accuracy.
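To show what quantisation means in practice, here is a minimal sketch of symmetric 8-bit quantisation applied to a short list of weights. It is an assumption-laden illustration, not the method used in the UCL experiments: the function names are hypothetical, the weights are made up, and it demonstrates only the storage reduction and rounding error, not the energy savings measured in the report.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest step, then clamp to guard against float edge cases.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the 8-bit integers."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.003, 1.0]   # made-up example values
    quantized, scale = quantize_int8(weights)
    restored = dequantize(quantized, scale)
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    print(quantized, f"worst-case error: {worst:.4f}")
```

Each weight now fits in 8 bits instead of the 32 bits of a standard float, at the cost of a rounding error of at most half a quantisation step per weight.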

The report also concluded that small models are more accessible. Most AI infrastructure is currently concentrated in high-income countries, leaving others behind and deepening global inequalities. According to the International Telecommunication Union (ITU), only 5% of Africa’s AI talent has access to the computing power needed to build or use generative AI. The three techniques explored in the report are particularly useful in low-resource settings, where energy and water are scarce and connectivity is limited.

“Generative AI’s annual energy footprint is already equivalent to that of a low-income country, and it is growing exponentially. To make AI more sustainable, we need a paradigm shift in how we use it, and we must educate consumers about what they can do to reduce their environmental impact,” said Tawfik Jelassi, Assistant Director-General for Communication and Information at UNESCO.

.jpg)

BSC Expo: “AI is a real b*****d to work with”

The annual British Society of Cinematographers (BSC) Expo returned to the Evolution Centre in Battersea, London, this weekend, attracting key professionals across the production industry with its packed seminar schedule and frank discussions on the future of the film industry.

John Gore Studios acquires AI production specialist Deep Fusion

UK-based film and TV group John Gore Studios has acquired AI specialist production company Deep Fusion Films.

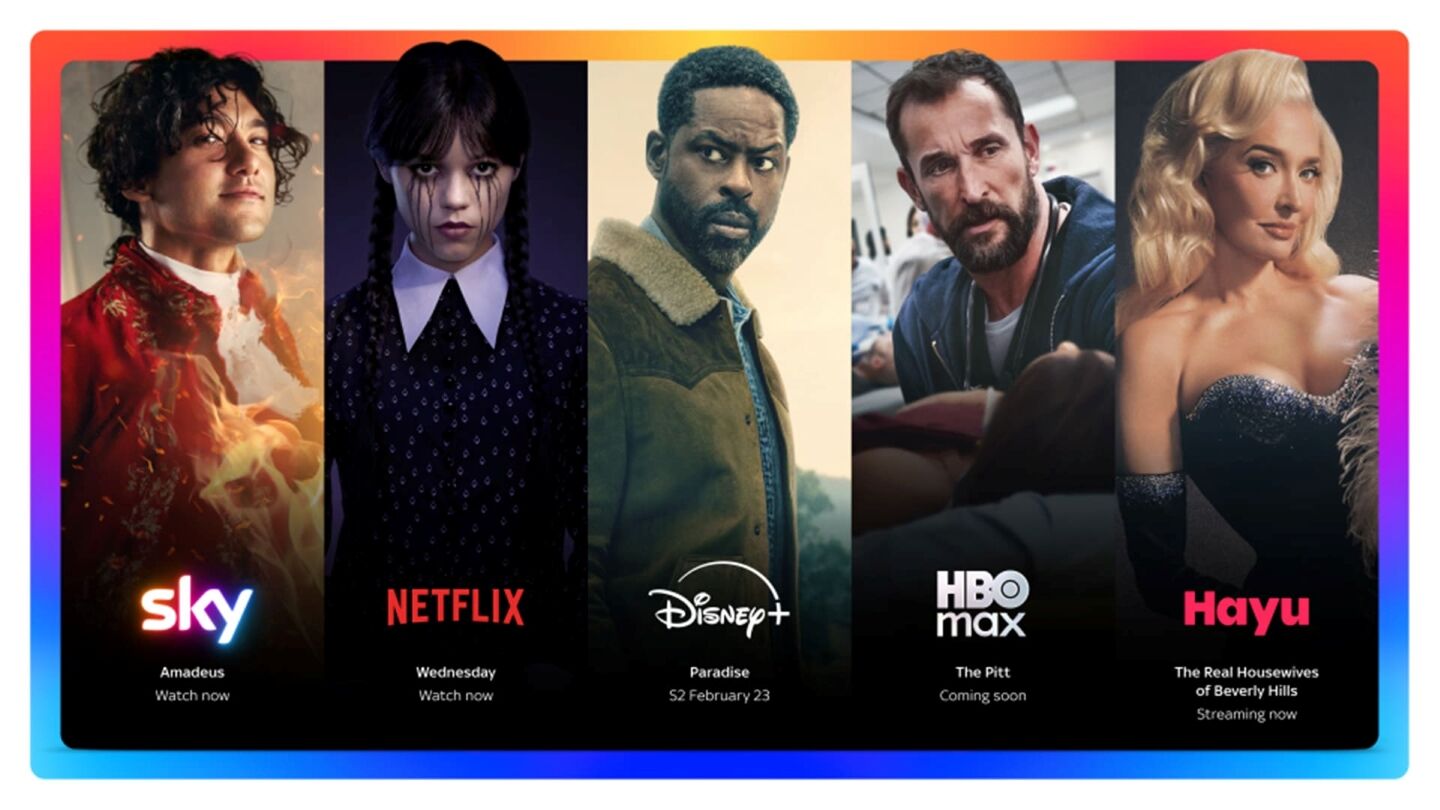

Sky to offer Netflix, Disney+, HBO Max, and Hayu in one subscription

Sky has announced "world-first" plans to bring together several leading streaming platforms as part a single TV subscription package.

Creative UK names Emily Cloke as Chief Executive

Creative UK has appointed former diplomat Emily Cloke as its new Chief Executive.

Rise launches Elevate programme for broadcast leaders

Rise has launched the Elevate programme, a six-week leadership course designed to fast-track the careers of mid-level women working across broadcast media technology.