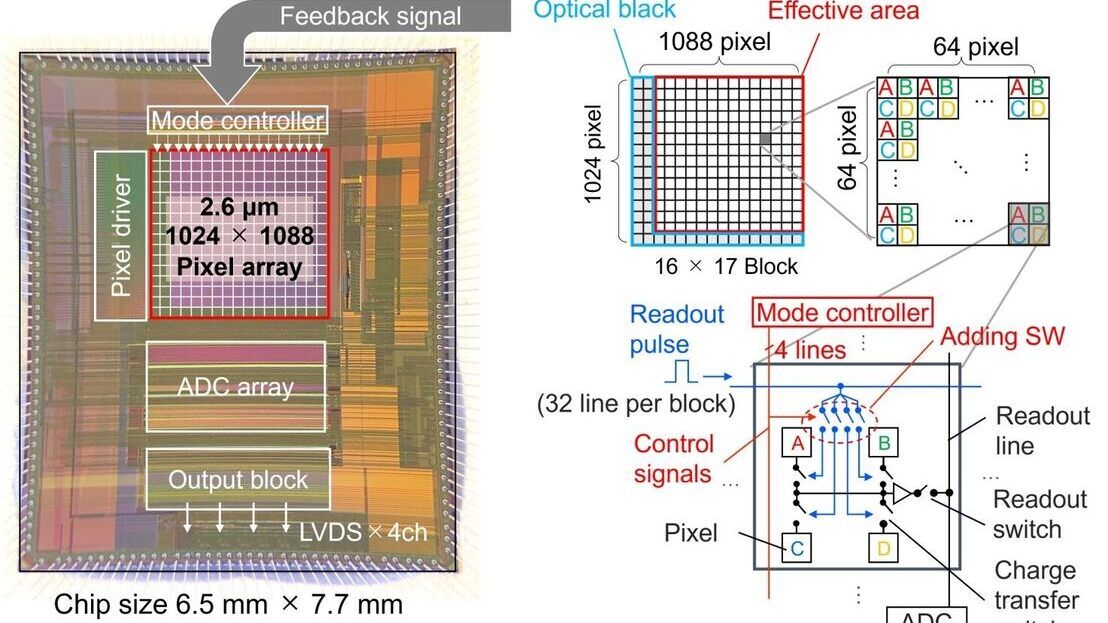

This paper describes a novel scene-adaptive imaging technology designed to enhance the image quality of wide-angle immersive videos such as 360-degree videos. It addresses the challenge of balancing resolution, frame rate, and dynamic range under sensor limitations by dynamically adjusting shooting conditions within a single frame on the basis of local subject characteristics.
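The idea of adjusting shooting conditions region by region can be illustrated with a small rule-based selector. This is a minimal sketch under stated assumptions, not the authors' method: the names `RegionStats`, `ShootingCondition` and `choose_condition`, the normalised motion/texture/brightness measures, the thresholds, and the 60/120 fps and 2x2 binning choices are all hypothetical and chosen only to show how resolution, frame rate and exposure could be traded off locally within one frame.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegionStats:
    """Hypothetical per-region measurements, each normalised to 0..1."""
    motion: float
    texture: float
    brightness: float


@dataclass(frozen=True)
class ShootingCondition:
    fps: int           # readout frame rate for the region
    binning: int       # binning factor per axis (1 = full resolution)
    exposure_s: float  # exposure time in seconds


def choose_condition(s: RegionStats,
                     motion_thr: float = 0.5,
                     texture_thr: float = 0.5,
                     dark_thr: float = 0.2,
                     bright_thr: float = 0.8) -> ShootingCondition:
    """Pick shooting conditions for one region (illustrative rules only)."""
    if s.motion >= motion_thr:
        # Fast motion: favour temporal resolution, give up spatial resolution.
        fps, binning = 120, 2
    elif s.texture >= texture_thr:
        # Fine, mostly static detail: keep full resolution at the base rate.
        fps, binning = 60, 1
    else:
        # Flat, static content: base rate plus binning keeps readout cost down.
        fps, binning = 60, 2

    # Start from a half-frame-period exposure, then lengthen it for dark
    # regions and shorten it for bright ones to extend usable dynamic range.
    exposure = 0.5 / fps
    if s.brightness < dark_thr:
        exposure *= 2.0
    elif s.brightness > bright_thr:
        exposure *= 0.25
    # The exposure can never exceed the frame period.
    exposure = min(exposure, 1.0 / fps)
    return ShootingCondition(fps, binning, exposure)


# Usage: three hypothetical regions of one wide-angle frame.
tiles = {
    "performer": RegionStats(motion=0.9, texture=0.4, brightness=0.6),
    "stage_set": RegionStats(motion=0.1, texture=0.8, brightness=0.5),
    "dark_sky": RegionStats(motion=0.0, texture=0.1, brightness=0.05),
}
for name, stats in tiles.items():
    print(name, choose_condition(stats))
```

A rule table keeps the trade-off explicit; a real sensor would also be constrained by which per-region modes its readout circuitry actually supports.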

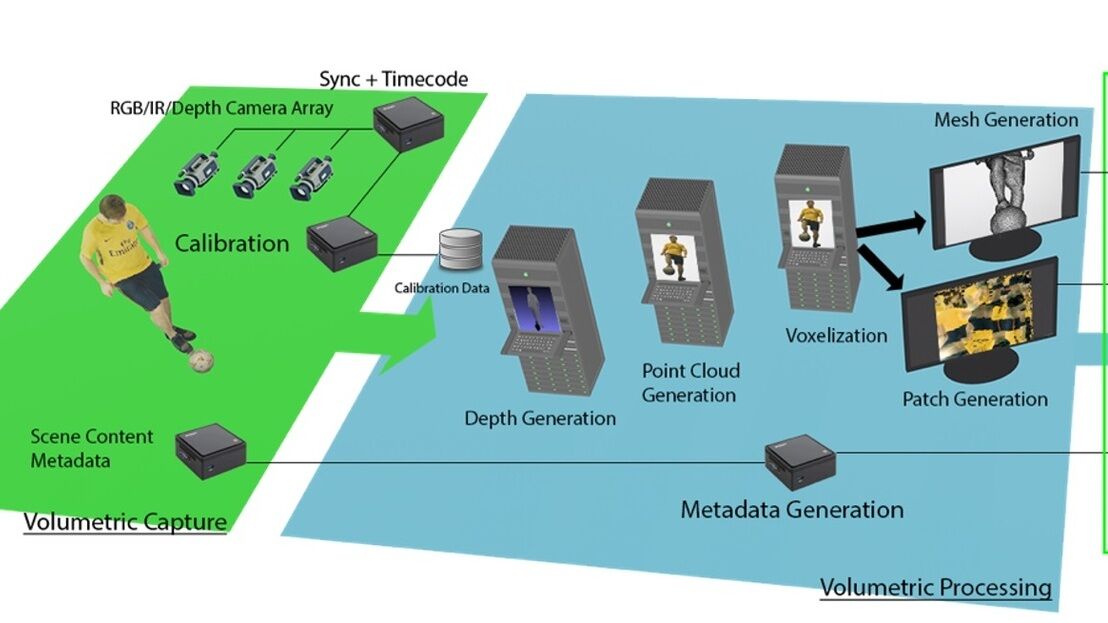

The global demand for highly immersive video content, such as 360-degree videos and dome-screen videos, is growing rapidly. With it comes an increasing need for cameras that can capture wide viewing angles effectively (e.g. panoramic cameras and omnidirectional multi-camera systems). Wide-angle videos typically contain subjects with diverse textures, movements, and brightness levels on a single screen, so image sensors must meet demanding performance requirements: not only resolution and frame rates exceeding ultra-high-definition television levels, but also a wide dynamic range for incident light.

However, developing an image sensor that fulfils all these requirements simultaneously is challenging. Conventional image sensors, such as complementary metal-oxide-semiconductor (CMOS) image sensors that operate under constant shooting conditions across the entire pixel array, are limited by a trade-off between resolution, frame rate, and the noise performance related to dynamic range (El Desouki et al. and Kawahito). Moreover, higher pixel readout rates increase both the volume of data that must be transferred and the power consumption of the image sensor.
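The readout-rate side of this trade-off is simple arithmetic: raw data rate = width × height × frame rate × bits per pixel. The sketch below compares reading a whole array at one high frame rate with a region-adaptive readout. The 7680 × 4320 array, 12-bit output, the 20 %/80 % split between moving and static areas, and the helper names `Region`, `uniform_rate` and `adaptive_rate` are assumptions for illustration; the model ignores blanking, compression and noise, so it says nothing about dynamic range.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    area_fraction: float  # share of the pixel array covered by this region
    fps: int              # readout frame rate used for this region
    binning: int          # binning factor per axis (1 = full resolution, 2 = 2x2)


def uniform_rate(width: int, height: int, fps: int, bits_per_pixel: int) -> float:
    """Raw readout rate in bit/s when the whole array uses one set of conditions."""
    return float(width * height * fps * bits_per_pixel)


def adaptive_rate(width: int, height: int, regions: list[Region],
                  bits_per_pixel: int) -> float:
    """Raw readout rate in bit/s when each region has its own frame rate and binning."""
    if abs(sum(r.area_fraction for r in regions) - 1.0) > 1e-9:
        raise ValueError("region area fractions must sum to 1")
    pixels = width * height
    # Binning by b per axis divides the number of values read out by b**2.
    return sum(pixels * r.area_fraction / r.binning ** 2 * r.fps * bits_per_pixel
               for r in regions)


W, H, BITS = 7680, 4320, 12  # assumed 8K array with 12-bit output
full = uniform_rate(W, H, 120, BITS)
# Assumed scene: 20 % moving (120 fps, 2x2 binning), 80 % static (60 fps, full resolution).
mixed = adaptive_rate(W, H, [Region(0.2, 120, 2), Region(0.8, 60, 1)], BITS)
print(f"uniform 120 fps: {full / 1e9:.1f} Gbit/s")   # uniform 120 fps: 47.8 Gbit/s
print(f"region-adaptive: {mixed / 1e9:.1f} Gbit/s")  # region-adaptive: 21.5 Gbit/s
print(f"ratio: {mixed / full:.2f}")                  # ratio: 0.45
```

Under these assumptions, delivering 120 fps only where the subject moves needs less than half the readout bandwidth of running the full array at 120 fps, which is the kind of saving that motivates varying shooting conditions within a frame.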

In this paper, we present...