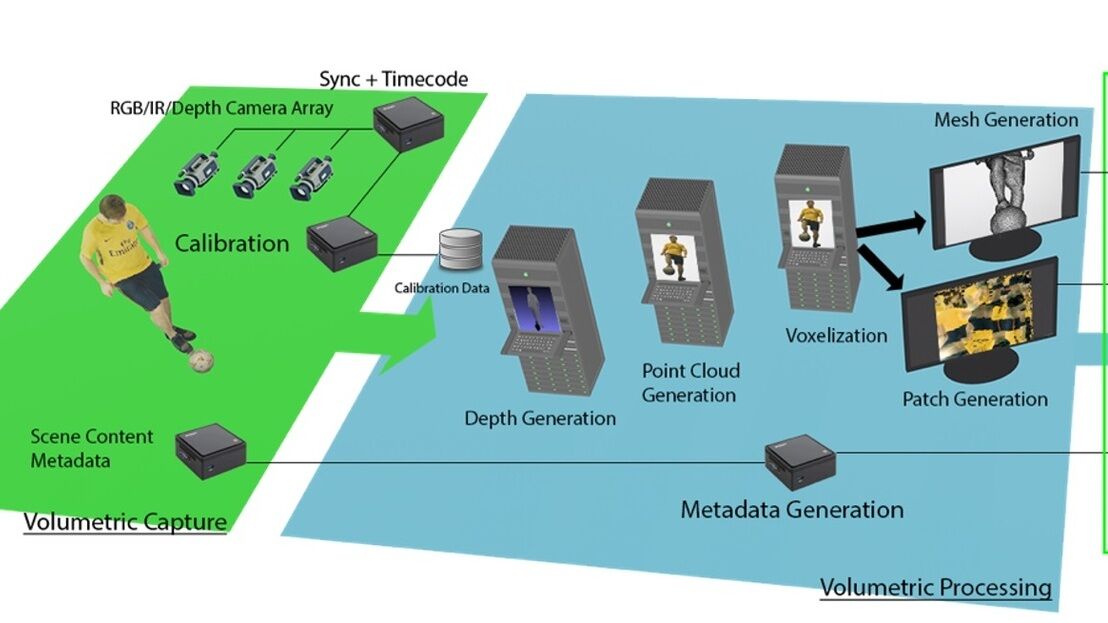

This paper presents approaches the BBC has been trialling for delivering live events into virtual immersive spaces. Some trials explore low-latency volumetric capture of artists, allowing virtual attendees, through their avatars, to interact with the performer and with each other. Other trials look at capturing performers in larger spaces, such as festival stages, using 2D/2.5D video approaches.

Game-like environments that offer live multiplayer capability are becoming a major form of entertainment. A large community, estimated at 3.2 million people in the UK, spends time in these environments for social and experiential reasons rather than for gaming. Music artists are using these spaces to stage virtual concerts that draw large crowds and significant revenue. Broadcasters and major music labels are starting to look at how to harness game-like media to deliver live music events.

Delivering a live concert into these virtual immersive spaces poses many challenges. For example, Ariana Grande’s 2021 ‘Rift Tour’ in Fortnite relied on pre-generated animated avatars of the performer, which made live interaction with the audience infeasible. Such an approach also adds significantly to production cost and makes a ‘simulcast’ of a broadcast event difficult or impossible.

This paper presents...
