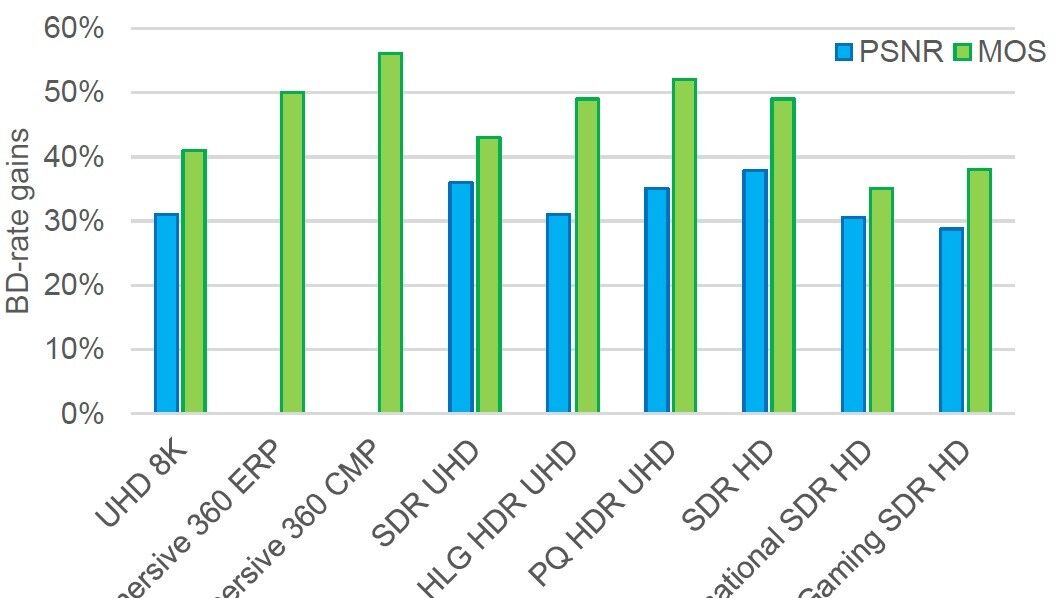

This paper demonstrates that our approach, based on a Large Multi-modal Model (LMM), reduces the computational complexity of sampling-based per-title video encoding by a factor of 13 while maintaining the same bitrate savings. These findings pave the way for more efficient and adaptive video encoding strategies and highlight the potential of multi-modal models in multimedia processing tasks.

Achieving the optimal balance between encoding efficiency and computational complexity remains a formidable challenge in video encoding. This paper introduces a framework that uses a Large Multi-modal Model (LMM) to optimize per-title video encoding. Harnessing the predictive capabilities of LMMs, the framework estimates the encoding complexity of each video’s content, enabling the dynamic selection of encoding configurations tailored to that video’s characteristics.
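The abstract does not say how the LMM's estimate is turned into an encoding configuration. As a minimal sketch, assume the model returns a scalar complexity score in [0, 1] and that each complexity class maps to a pre-built bitrate ladder. The thresholds, ladder values, and the names `LADDERS`, `complexity_class`, and `select_ladder` are all hypothetical and are not the authors' implementation.

```python
from typing import Dict, List, Tuple

# Hypothetical reference ladders, one per complexity class.
# Each rung is (frame height in pixels, target bitrate in kbps); the values are
# illustrative placeholders, not figures from the paper.
LADDERS: Dict[str, List[Tuple[int, int]]] = {
    "low":    [(360, 200), (720, 800), (1080, 2000)],
    "medium": [(360, 400), (720, 1600), (1080, 4000)],
    "high":   [(360, 800), (720, 3000), (1080, 7000)],
}


def complexity_class(score: float, low_max: float = 0.33, medium_max: float = 0.66) -> str:
    """Bucket an (assumed) LMM complexity score in [0, 1] into a class.

    The thresholds are arbitrary assumptions chosen for illustration.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score must be in [0, 1], got {score}")
    if score <= low_max:
        return "low"
    if score <= medium_max:
        return "medium"
    return "high"


def select_ladder(score: float) -> List[Tuple[int, int]]:
    """Pick a pre-built ladder from the predicted complexity, with no trial encodes."""
    return LADDERS[complexity_class(score)]


if __name__ == "__main__":
    # In practice the score would come from the LMM; here it is a made-up value.
    predicted_score = 0.8
    print(complexity_class(predicted_score))  # high
    print(select_ladder(predicted_score))     # [(360, 800), (720, 3000), (1080, 7000)]
```

A fixed lookup like this skips trial encodes entirely; a design that instead uses the prediction to shrink the sampling grid would trade some of that saving for per-title precision.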

The proposed framework departs from traditional per-title encoding methods, which often rely on expensive and time-consuming sampling of the rate-distortion space. Through a comprehensive set of experiments, we demonstrate that our LMM-based approach reduces the computational complexity of sampling-based per-title video encoding by a factor of 13 while maintaining the same bitrate savings.
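For context, a common sampling-based formulation of per-title encoding encodes each title at a grid of (resolution, bitrate) points, measures the quality of every trial encode, and keeps the points on the upper convex hull of the rate-quality curve as the title's ladder. The sketch below assumes that formulation and uses made-up quality scores; `RDPoint` and `rd_convex_hull` are illustrative names, not the paper's code. Every point passed in stands for a full trial encode, which is the sampling cost the LMM-based approach aims to reduce.

```python
from typing import List, NamedTuple


class RDPoint(NamedTuple):
    """One trial encode of a title (values here are illustrative)."""
    height: int          # encoded frame height in pixels, e.g. 1080
    bitrate_kbps: float  # encoded bitrate
    quality: float       # objective quality score, e.g. a VMAF-style metric


def _cross(o: RDPoint, a: RDPoint, b: RDPoint) -> float:
    """z-component of (a - o) x (b - o) in the (bitrate, quality) plane."""
    return ((a.bitrate_kbps - o.bitrate_kbps) * (b.quality - o.quality)
            - (a.quality - o.quality) * (b.bitrate_kbps - o.bitrate_kbps))


def rd_convex_hull(points: List[RDPoint]) -> List[RDPoint]:
    """Return the rising part of the upper convex hull across all resolutions."""
    # Sort by bitrate; at equal bitrate, put the higher-quality encode first.
    pts = sorted(points, key=lambda p: (p.bitrate_kbps, -p.quality))
    hull: List[RDPoint] = []
    for p in pts:
        # Monotone-chain upper hull: pop the last point while it does not make
        # a strict clockwise (concave) turn toward p.
        while len(hull) >= 2 and _cross(hull[-2], hull[-1], p) >= 0:
            hull.pop()
        hull.append(p)
    # Keep only points that raise quality; extra bitrate without a gain is wasted.
    frontier: List[RDPoint] = []
    for p in hull:
        if not frontier or p.quality > frontier[-1].quality:
            frontier.append(p)
    return frontier


if __name__ == "__main__":
    # Nine hypothetical trial encodes: three bitrates at each of three resolutions.
    samples = [
        RDPoint(360, 300, 40), RDPoint(360, 800, 60), RDPoint(360, 2000, 68),
        RDPoint(720, 800, 55), RDPoint(720, 2000, 78), RDPoint(720, 4000, 85),
        RDPoint(1080, 2000, 75), RDPoint(1080, 4000, 90), RDPoint(1080, 8000, 96),
    ]
    ladder = rd_convex_hull(samples)
    print([(p.height, p.bitrate_kbps) for p in ladder])
    # [(360, 300), (360, 800), (720, 2000), (1080, 4000), (1080, 8000)]
```

Here nine trial encodes yield a five-rung ladder; the grid grows multiplicatively with the number of resolutions and bitrates sampled, which is why trimming it matters.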

The implications of this research...


Opportunities for emerging 5G and Wi-Fi 6E technology in modern wireless production

This paper examines the changing regulatory framework and the complex technical choices now available to broadcasters for modern wireless IP production.

Leveraging AI to reduce technical expertise in media production and optimise workflows

Tech Papers 2025: This paper presents a series of PoCs that leverage AI to streamline broadcasting gallery operations, facilitate remote collaboration and enhance media production workflows.

Automatic quality control of broadcast audio

Tech Papers 2025: This paper describes work undertaken as part of the AQUA project funded by InnovateUK to address shortfalls in automated audio QC processes with an automated software solution for both production and distribution of audio content on premises or in the cloud.

Demonstration of AI-based fancam production for the Kohaku Uta Gassen using 8K cameras and VVERTIGO post-production pipeline

Tech Papers 2025: This paper details a successful demonstration of an AI-based fancam production pipeline that uses 8K cameras and the VVERTIGO post-production system to automatically generate personalized video content for the Kohaku Uta Gassen.

EBU Neo - a sophisticated multilingual chatbot for a trusted news ecosystem exploration

Tech Papers 2025: The paper introduces NEO, a sophisticated multilingual chatbot designed to support a trusted news ecosystem.